How Do I Tell if Signlab Family Is on My Program

by William W Wold

I wrote a programming language. Hither's how y'all tin, too.

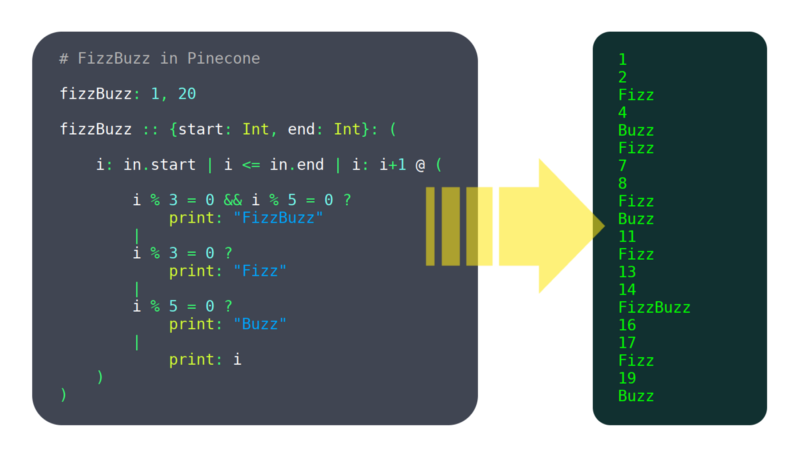

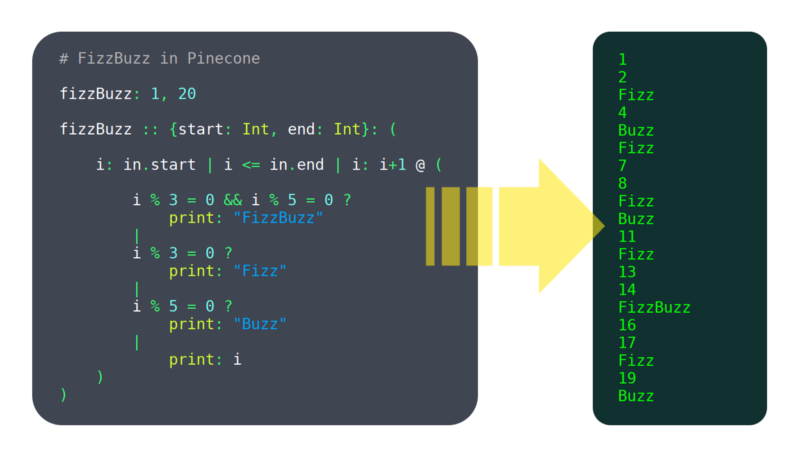

Over the past 6 months, I've been working on a programming language called Pinecone. I wouldn't call it mature yet, but it already has enough features working to be usable, such as:

- variables

- functions

- user defined structures

If you're interested in it, bank check out Pinecone's landing page or its GitHub repo.

I'm not an skillful. When I started this project, I had no clue what I was doing, and I nonetheless don't. I've taken zilch classes on linguistic communication creation, read simply a bit about it online, and did not follow much of the advice I accept been given.

And yet, I still made a completely new language. And information technology works. And so I must be doing something right.

In this post, I'll dive under the hood and bear witness you the pipeline Pinecone (and other programming languages) employ to turn source code into magic.

I'll also bear on some of the tradeoffs I've had make, and why I made the decisions I did.

This is by no means a consummate tutorial on writing a programming language, but it'south a adept starting indicate if y'all're curious about language development.

Getting Started

"I have absolutely no thought where I would even start" is something I hear a lot when I tell other developers I'chiliad writing a language. In case that'due south your reaction, I'll at present get through some initial decisions that are made and steps that are taken when starting any new language.

Compiled vs Interpreted

At that place are two major types of languages: compiled and interpreted:

- A compiler figures out everything a program will exercise, turns it into "machine code" (a format the computer can run actually fast), then saves that to be executed later.

- An interpreter steps through the source code line by line, figuring out what it's doing as it goes.

Technically any language could be compiled or interpreted, but one or the other ordinarily makes more sense for a specific language. Generally, interpreting tends to be more flexible, while compiling tends to have higher performance. Simply this is only scratching the surface of a very complex topic.

I highly value performance, and I saw a lack of programming languages that are both high functioning and simplicity-oriented, then I went with compiled for Pinecone.

This was an important decision to make early on, because a lot of linguistic communication design decisions are affected by it (for example, static typing is a big benefit to compiled languages, but not so much for interpreted ones).

Despite the fact that Pinecone was designed with compiling in heed, information technology does take a fully functional interpreter which was the merely fashion to run information technology for a while. There are a number of reasons for this, which I will explain subsequently.

Choosing a Language

I know information technology'due south a bit meta, merely a programming linguistic communication is itself a program, and thus you demand to write it in a language. I chose C++ considering of its performance and big feature set. Also, I actually practise bask working in C++.

If yous are writing an interpreted linguistic communication, it makes a lot of sense to write it in a compiled 1 (like C, C++ or Swift) considering the functioning lost in the language of your interpreter and the interpreter that is interpreting your interpreter will compound.

If you plan to compile, a slower language (like Python or JavaScript) is more than acceptable. Compile time may exist bad, but in my opinion that isn't nearly every bit big a deal as bad run fourth dimension.

High Level Pattern

A programming language is generally structured as a pipeline. That is, information technology has several stages. Each stage has information formatted in a specific, well divers way. It also has functions to transform data from each phase to the next.

The showtime stage is a string containing the entire input source file. The final stage is something that can exist run. This will all become clear as nosotros go through the Pinecone pipeline stride by step.

Lexing

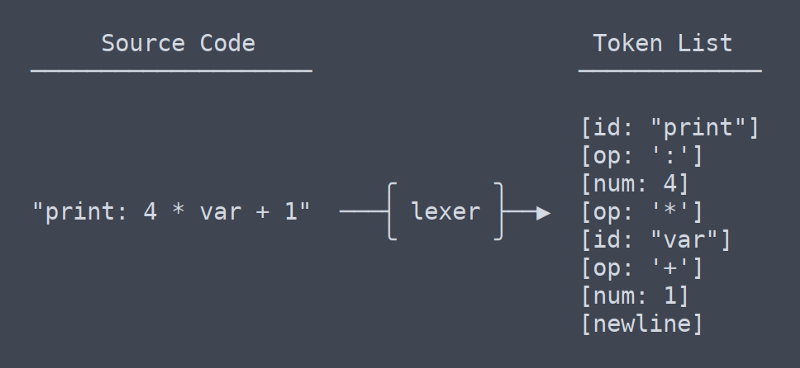

The first step in most programming languages is lexing, or tokenizing. 'Lex' is brusque for lexical analysis, a very fancy word for splitting a bunch of text into tokens. The word 'tokenizer' makes a lot more sense, but 'lexer' is so much fun to say that I utilise it anyhow.

Tokens

A token is a small unit of a linguistic communication. A token might exist a variable or function proper noun (AKA an identifier), an operator or a number.

Job of the Lexer

The lexer is supposed to have in a cord containing an entire files worth of source code and spit out a list containing every token.

Future stages of the pipeline will not refer back to the original source code, so the lexer must produce all the information needed by them. The reason for this relatively strict pipeline format is that the lexer may exercise tasks such as removing comments or detecting if something is a number or identifier. You desire to keep that logic locked inside the lexer, both so you don't take to think nearly these rules when writing the rest of the language, so you can change this blazon of syntax all in one place.

Flex

The day I started the language, the first thing I wrote was a unproblematic lexer. Soon afterward, I started learning about tools that would supposedly make lexing simpler, and less buggy.

The predominant such tool is Flex, a program that generates lexers. You give it a file which has a special syntax to describe the language's grammar. From that it generates a C program which lexes a string and produces the desired output.

My Decision

I opted to go on the lexer I wrote for the fourth dimension existence. In the terminate, I didn't meet significant benefits of using Flex, at least not enough to justify calculation a dependency and complicating the build process.

My lexer is only a few hundred lines long, and rarely gives me any problem. Rolling my own lexer also gives me more flexibility, such as the ability to add an operator to the language without editing multiple files.

Parsing

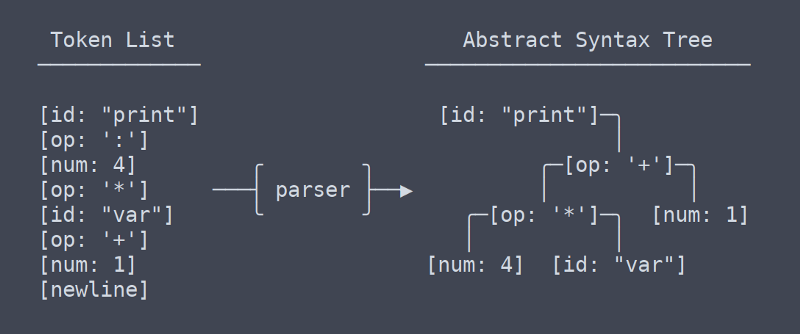

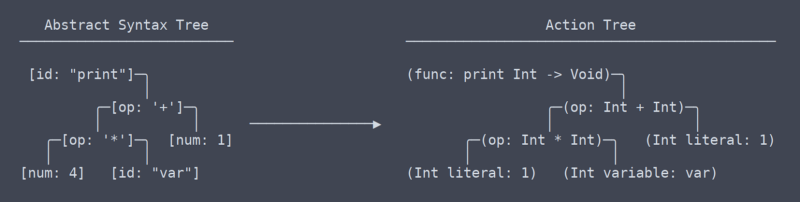

The 2d phase of the pipeline is the parser. The parser turns a list of tokens into a tree of nodes. A tree used for storing this type of data is known as an Abstract Syntax Tree, or AST. At least in Pinecone, the AST does not have whatsoever info almost types or which identifiers are which. It is simply structured tokens.

Parser Duties

The parser adds construction to to the ordered list of tokens the lexer produces. To stop ambiguities, the parser must have into account parenthesis and the order of operations. Simply parsing operators isn't terribly hard, but as more language constructs become added, parsing can go very circuitous.

Bison

Again, in that location was a decision to make involving a tertiary party library. The predominant parsing library is Bison. Bison works a lot like Flex. You write a file in a custom format that stores the grammar data, then Bison uses that to generate a C program that will do your parsing. I did not choose to use Bison.

Why Custom Is Amend

With the lexer, the conclusion to use my own lawmaking was fairly obvious. A lexer is such a trivial plan that not writing my own felt almost as empty-headed as not writing my own 'left-pad'.

With the parser, it's a unlike matter. My Pinecone parser is currently 750 lines long, and I've written three of them because the first two were trash.

I originally made my conclusion for a number of reasons, and while it hasn't gone completely smoothly, virtually of them hold true. The major ones are as follows:

- Minimize context switching in workflow: context switching between C++ and Pinecone is bad enough without throwing in Bison's grammar grammar

- Keep build unproblematic: every time the grammar changes Bison has to be run earlier the build. This tin exist automatic but it becomes a pain when switching between build systems.

- I like edifice cool shit: I didn't brand Pinecone because I thought information technology would be easy, so why would I delegate a central office when I could do it myself? A custom parser may not be lilliputian, but information technology is completely achievable.

In the outset I wasn't completely sure if I was going down a viable path, merely I was given confidence by what Walter Bright (a programmer on an early version of C++, and the creator of the D language) had to say on the topic:

"Somewhat more controversial, I wouldn't bother wasting time with lexer or parser generators and other so-called "compiler compilers." They're a waste product of time. Writing a lexer and parser is a tiny percentage of the chore of writing a compiler. Using a generator will have up near equally much time equally writing ane by hand, and it volition marry you to the generator (which matters when porting the compiler to a new platform). And generators also accept the unfortunate reputation of emitting lousy error letters."

Action Tree

We take now left the the surface area of common, universal terms, or at least I don't know what the terms are anymore. From my understanding, what I call the 'action tree' is most alike to LLVM's IR (intermediate representation).

In that location is a subtle but very significant difference between the action tree and the abstract syntax tree. It took me quite a while to figure out that at that place even should be a divergence between them (which contributed to the need for rewrites of the parser).

Action Tree vs AST

Put only, the action tree is the AST with context. That context is info such as what type a function returns, or that 2 places in which a variable is used are in fact using the aforementioned variable. Because it needs to figure out and remember all this context, the code that generates the action tree needs lots of namespace lookup tables and other thingamabobs.

Running the Action Tree

Once nosotros have the action tree, running the code is easy. Each activeness node has a function 'execute' which takes some input, does any the action should (including possibly calling sub action) and returns the activity's output. This is the interpreter in action.

Compiling Options

"But wait!" I hear you say, "isn't Pinecone supposed to by compiled?" Yep, it is. But compiling is harder than interpreting. There are a few possible approaches.

Build My Own Compiler

This sounded similar a good idea to me at offset. I practise love making things myself, and I've been itching for an excuse to become good at associates.

Unfortunately, writing a portable compiler is non as like shooting fish in a barrel as writing some machine code for each language element. Because of the number of architectures and operating systems, information technology is impractical for any private to write a cross platform compiler backend.

Even the teams behind Swift, Rust and Clang don't want to bother with information technology all on their ain, so instead they all use…

LLVM

LLVM is a collection of compiler tools. Information technology's basically a library that will plow your language into a compiled executable binary. It seemed similar the perfect choice, so I jumped correct in. Sadly I didn't check how deep the h2o was and I immediately drowned.

LLVM, while not associates language difficult, is gigantic complex library hard. Information technology's not impossible to utilize, and they accept practiced tutorials, but I realized I would have to get some do before I was ready to fully implement a Pinecone compiler with information technology.

Transpiling

I wanted some sort of compiled Pinecone and I wanted it fast, so I turned to one method I knew I could make work: transpiling.

I wrote a Pinecone to C++ transpiler, and added the ability to automatically compile the output source with GCC. This currently works for almost all Pinecone programs (though there are a few edge cases that break it). It is not a particularly portable or scalable solution, but it works for the fourth dimension being.

Futurity

Bold I continue to develop Pinecone, Information technology will get LLVM compiling support sooner or later. I suspect no mater how much I piece of work on it, the transpiler will never be completely stable and the benefits of LLVM are numerous. It's just a matter of when I have time to make some sample projects in LLVM and become the hang of it.

Until and then, the interpreter is great for trivial programs and C++ transpiling works for most things that demand more than operation.

Conclusion

I hope I've made programming languages a little less mysterious for you. If you exercise want to make one yourself, I highly recommend information technology. There are a ton of implementation details to figure out but the outline here should be enough to become you going.

Hither is my high level advice for getting started (remember, I don't actually know what I'm doing, so take it with a grain of salt):

- If in doubt, become interpreted. Interpreted languages are generally easier design, build and acquire. I'm not discouraging yous from writing a compiled one if y'all know that'south what y'all want to practise, just if you're on the contend, I would get interpreted.

- When it comes to lexers and parsers, do whatever you want. In that location are valid arguments for and confronting writing your ain. In the finish, if you think out your design and implement everything in a sensible mode, it doesn't really affair.

- Larn from the pipeline I concluded upwardly with. A lot of trial and error went into designing the pipeline I have now. I have attempted eliminating ASTs, ASTs that turn into actions trees in place, and other terrible ideas. This pipeline works, so don't change it unless you have a actually good idea.

- If you lot don't have the time or motivation to implement a complex general purpose language, try implementing an esoteric language such as Brainfuck. These interpreters can be as short equally a few hundred lines.

I have very few regrets when information technology comes to Pinecone development. I made a number of bad choices along the way, but I take rewritten most of the lawmaking affected past such mistakes.

Right at present, Pinecone is in a practiced enough state that it functions well and can be easily improved. Writing Pinecone has been a hugely educational and enjoyable feel for me, and it's simply getting started.

Learn to code for costless. freeCodeCamp's open up source curriculum has helped more than 40,000 people get jobs as developers. Get started

Source: https://www.freecodecamp.org/news/the-programming-language-pipeline-91d3f449c919/

0 Response to "How Do I Tell if Signlab Family Is on My Program"

إرسال تعليق